by Valluru B. Rao

M&T Books, IDG Books Worldwide, Inc.

ISBN: 1558515526 Pub Date: 06/01/95


C++ Neural Networks and Fuzzy Logic


You need to place the first 200 lines in a training.dat file (provided for you on the accompanying diskette) and the next 40 lines of data in a separate test.dat file for use in testing. You will read more about this shortly. The diskette also provides more data than this, in raw form, for you to do further experiments.
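If you prefer to build the two files yourself from a longer raw file, the split can be automated. The sketch below is a minimal, hypothetical helper set (`splitTrainTest`, `readLines`, and `writeLines` are illustrative names, not part of the book's simulator). It assumes each raw line is already in the format the simulator expects and performs no normalization; it only takes the first 200 lines for training and the next 40 for testing.

```cpp
#include <cassert>
#include <cstddef>
#include <fstream>
#include <string>
#include <utility>
#include <vector>

// Hypothetical helper: split the lines of a raw data file into a training set
// (the first nTrain lines) and a test set (the next nTest lines).
// Any lines after that are left out of both sets.
std::pair<std::vector<std::string>, std::vector<std::string>>
splitTrainTest(const std::vector<std::string>& lines,
               std::size_t nTrain, std::size_t nTest) {
    assert(lines.size() >= nTrain + nTest && "not enough data lines");
    const auto trainEnd = lines.begin() + static_cast<std::ptrdiff_t>(nTrain);
    const auto testEnd = trainEnd + static_cast<std::ptrdiff_t>(nTest);
    return {std::vector<std::string>(lines.begin(), trainEnd),
            std::vector<std::string>(trainEnd, testEnd)};
}

// Read every line of a text file into a vector.
std::vector<std::string> readLines(const std::string& path) {
    std::ifstream in(path);
    std::vector<std::string> lines;
    for (std::string line; std::getline(in, line);) lines.push_back(line);
    return lines;
}

// Write lines to a text file, one per line.
void writeLines(const std::string& path, const std::vector<std::string>& lines) {
    std::ofstream out(path);
    for (const auto& line : lines) out << line << '\n';
}
```

For example, `auto [train, test] = splitTrainTest(readLines("raw.dat"), 200, 40);` followed by `writeLines("training.dat", train);` and `writeLines("test.dat", test);` would produce the two files; `raw.dat` is a placeholder name.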

With the training data available, we set up a simulation. There are 15 inputs and 1 output. A total of three layers are used, with a middle layer of size 5. The middle layer should be kept as small as possible while still giving acceptable results. The optimum number and sizes of layers can be found only through much trial and error. After each run, you can look at the error for the training set and for the test set.
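To see why a small middle layer matters, it helps to count the free parameters. The hypothetical helper below (not part of the book's simulator) counts the connection weights in a fully connected layered network, ignoring any bias or threshold terms. For the 15-5-1 network it gives 15 × 5 + 5 × 1 = 80 weights, set against 200 training patterns; doubling the middle layer to 10 neurons doubles the count to 160, giving the network more capacity to memorize the training set instead of generalizing.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: count the connection weights in a fully connected
// feedforward network, given the size of each layer (input layer first,
// output layer last). Bias/threshold terms are not counted.
std::size_t countWeights(const std::vector<std::size_t>& layerSizes) {
    assert(layerSizes.size() >= 2 && "need at least an input and an output layer");
    std::size_t total = 0;
    // Each pair of adjacent layers is fully connected.
    for (std::size_t i = 0; i + 1 < layerSizes.size(); ++i)
        total += layerSizes[i] * layerSizes[i + 1];
    return total;
}
```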

You obtain the error for the test set by running the simulator in Training mode for one cycle with weights loaded from the weights file (you need to temporarily copy the test data, with expected outputs, to the training.dat file). Since this is the last and only cycle, the weights are not modified, and you get a reading of the average error. Refer to Chapter 13 for more information on the simulator’s Test and Training modes. This approach was taken with five runs of the simulator of 500 cycles each. Table 14.5 summarizes the results along with the parameters used. The test set error improves with each run up to run #4. For run #5, the training set error decreases, but the test set error increases, indicating the onset of memorization. Run #4 is used for the final network results, showing a test set RMS error of 13.9% and a training set error of 6.9%.
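The error figures are averages over a whole data set. As a sketch of what an RMS (root-mean-square) error over a set of patterns means, the hypothetical function below compares expected and predicted outputs for a single-output network such as this one. The Chapter 13 simulator computes its own error figure internally, and its exact averaging may differ from this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: root-mean-square error between expected and predicted
// outputs, one output value per pattern.
double rmsError(const std::vector<double>& expected,
                const std::vector<double>& predicted) {
    assert(expected.size() == predicted.size() && !expected.empty());
    double sumSq = 0.0;
    for (std::size_t i = 0; i < expected.size(); ++i) {
        const double d = expected[i] - predicted[i];
        sumSq += d * d;  // accumulate squared error
    }
    // Mean of the squared errors, then the square root.
    return std::sqrt(sumSq / static_cast<double>(expected.size()));
}
```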

| Run # | Tolerance | Beta (learning rate) | Alpha (momentum) | NF (noise factor) | Max cycles | Cycles run | Training set error | Test set error |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.001 | 0.5 | 0.001 | 0.0005 | 500 | 500 | 0.150938 | 0.25429 |
| 2 | 0.001 | 0.4 | 0.001 | 0.0005 | 500 | 500 | 0.114948 | 0.185828 |
| 3 | 0.001 | 0.3 | 0 | 0 | 500 | 500 | 0.0936422 | 0.148541 |
| 4 | 0.001 | 0.2 | 0 | 0 | 500 | 500 | 0.068976 | 0.139230 |
| 5 | 0.001 | 0.1 | 0 | 0 | 500 | 500 | 0.0621412 | 0.143430 |

NOTE: If you find that the test set error does not decrease much while the training set error continues to make substantial progress, memorization is starting to set in (run #5 in this example). It is important to monitor the test set(s) you are using during training to make sure that good, generalized learning is occurring rather than memorization or overfitting of the data. In the case shown, the test set error continued to improve until run #5, where it degraded. To make any further improvements beyond what was achieved, you need to revisit the 12-step approach to forecasting model design.
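The reasoning in the note can be expressed as two simple checks over the rows of Table 14.5: pick the run with the lowest test set error, and flag the first run where the training error falls while the test error rises relative to the previous run. The `RunResult` struct and both functions are hypothetical helpers for illustration only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One row of a results table such as Table 14.5.
struct RunResult {
    int run;
    double trainingError;
    double testError;
};

// Index of the run with the lowest test set error.
std::size_t bestRun(const std::vector<RunResult>& runs) {
    assert(!runs.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < runs.size(); ++i)
        if (runs[i].testError < runs[best].testError) best = i;
    return best;
}

// Index of the first run whose training error went down while its test
// error went up compared with the previous row (a sign of memorization),
// or runs.size() if that never happens.
std::size_t memorizationOnset(const std::vector<RunResult>& runs) {
    for (std::size_t i = 1; i < runs.size(); ++i)
        if (runs[i].trainingError < runs[i - 1].trainingError &&
            runs[i].testError > runs[i - 1].testError)
            return i;
    return runs.size();
}
```

Loading the five rows of Table 14.5 into a `std::vector<RunResult>`, `bestRun` selects run #4 (test error 0.139230) and `memorizationOnset` flags run #5, where the test error rises to 0.143430 while the training error falls to 0.0621412, matching the conclusion in the text.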

To see how closely individual predictions match the actual values, you can copy any period you’d like to the test.dat file, with the expected output fields deleted. Then run the simulator in Test mode to get its output value for each input vector, and compare that output with the expected value in your training set or test set.
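Deleting the expected output fields can be automated. The sketch below assumes each line of training.dat holds the input values followed by the expected output values, separated by whitespace (15 inputs and 1 output here); check this assumption against the file format described in Chapter 13 before relying on it. `stripExpectedOutputs` is a hypothetical helper that keeps only the first `nInputs` fields of a line, copying them verbatim.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>

// Hypothetical helper: turn a training-style line (nInputs input values
// followed by expected output values) into a test-style line that holds
// only the inputs. Fields are copied as text, so no precision is lost.
std::string stripExpectedOutputs(const std::string& line, std::size_t nInputs) {
    std::istringstream in(line);
    std::ostringstream out;
    std::string field;
    std::size_t kept = 0;
    while (kept < nInputs && in >> field) {
        if (kept > 0) out << ' ';  // single space between fields
        out << field;
        ++kept;
    }
    assert(kept == nInputs && "line has fewer fields than expected inputs");
    return out.str();
}
```

Applied line by line to the copied period with `nInputs` set to 15, this would produce the contents of test.dat.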

Now that you’re done, you need to un-normalize the output to express the answer in terms of the change in the S&P 500 index. What you’ve accomplished is a way to take data from a financial newspaper, such as Barron’s or Investor’s Business Daily, feed the current week’s data into your trained neural network, and get a prediction of what the S&P 500 index is likely to do ten weeks from now.

Here are the steps to un-normalize:

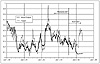
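The exact un-normalization steps for this example are given in the illustration. As a general sketch, assuming the output was scaled with a linear (min-max) normalization, un-normalizing simply inverts that linear map. The `Scaling` struct, the function names, and the ranges used below are hypothetical; substitute the ranges actually used when the data were normalized. The same forward map must also be applied to the current week’s inputs before they are fed to the trained network.

```cpp
#include <cassert>

// ASSUMPTION: values were normalized by mapping [rawMin, rawMax]
// linearly onto [normMin, normMax].
struct Scaling {
    double rawMin, rawMax;    // range of the raw values (e.g., % change)
    double normMin, normMax;  // range presented to the network (e.g., 0..1)
};

// Raw value -> normalized value.
double normalize(double raw, const Scaling& s) {
    assert(s.rawMax > s.rawMin && s.normMax > s.normMin);
    return s.normMin +
           (raw - s.rawMin) * (s.normMax - s.normMin) / (s.rawMax - s.rawMin);
}

// Normalized network output -> raw value (inverse of normalize).
double unnormalize(double norm, const Scaling& s) {
    assert(s.rawMax > s.rawMin && s.normMax > s.normMin);
    return s.rawMin +
           (norm - s.normMin) * (s.rawMax - s.rawMin) / (s.normMax - s.normMin);
}
```

For example, with a hypothetical raw range of -10% to +10% mapped onto 0 to 1, a network output of 0.75 un-normalizes to a 5% change.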

This is only a very brief illustration (not meant for trading!) of what you can do with neural networks in financial forecasting. You need to analyze the data further, provide more predictive indicators, and optimize or redesign your neural network architecture to get better generalization and lower error. To have a robust predictor that can be traded with, you need to present many more test cases representing different market conditions. Figure 14.6 shows a graph of the expected and predicted outputs for the test set and the training set, using normalized output values. Note that the error averages about 13.9% over the test set and 6.9% over the training set. The test set predictions track well at the beginning but diverge sharply in the last few weeks.

Figure 14.6 Comparison of predicted versus actual for the training and test data sets.

The preprocessing steps shown in this chapter are one example of the kinds of steps you can use; there is a vast variety of analysis and statistical methods that can be applied in preprocessing. To work with fuzzy data, you can use a program like the fuzzifier program developed in Chapter 3 to preprocess some of the data.
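To give a feel for that kind of preprocessing, here is a minimal fuzzification sketch using triangular membership functions. It is not the Chapter 3 fuzzifier program; the struct, the functions, and the category boundaries are hypothetical. Each input value is converted into a degree of membership (0 to 1) in each category, and those degrees could then be presented to the network in place of, or alongside, the raw value.

```cpp
#include <cassert>
#include <string>
#include <vector>

// A fuzzy category with a triangular membership function that rises from 0
// at `left` to 1 at `center` and falls back to 0 at `right`.
struct FuzzyCategory {
    std::string name;
    double left, center, right;
};

// Degree of membership (0..1) of x in a triangular category.
double membership(double x, const FuzzyCategory& c) {
    assert(c.left < c.center && c.center < c.right);
    if (x <= c.left || x >= c.right) return 0.0;
    if (x <= c.center) return (x - c.left) / (c.center - c.left);  // rising edge
    return (c.right - x) / (c.right - c.center);                   // falling edge
}

// Fuzzify x against every category: one membership value per category.
std::vector<double> fuzzify(double x, const std::vector<FuzzyCategory>& cats) {
    std::vector<double> degrees;
    degrees.reserve(cats.size());
    for (const auto& c : cats) degrees.push_back(membership(x, c));
    return degrees;
}
```

With categories low (-1, 0, 1) and medium (0, 1, 2), `fuzzify(0.25, ...)` returns memberships of 0.75 in low and 0.25 in medium.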

There are many other experiments you can do from here. The example chosen was in the field of financial forecasting, but you could certainly try the simulator on other problems, such as sales forecasting or perhaps even weather forecasting. The key to all of these applications, though, is how you present and enhance the data, and how you work through parameter selection by trial and error. Before concluding this chapter, we will cover more topics in preprocessing and present some case studies in financial forecasting. If you have more interest in this area, consider the suggestions made in the 12-step approach to forecasting model design and research some of the resources listed at the end of this chapter.
